Robust Estimation for the Erdős-Rényi Model

Final project for CSCI 2952Q: Robust Algorithms in Machine Learning

This project was completed as part of the course CSCI 2952Q: Robust Algorithms in Machine Learning at Brown University. We study the problem of estimating the edge formation probability $p$ in an Erdős-Rényi random graph under adversarial perturbations.

We introduce the $(q, \epsilon)$-adversarial model, which generalizes existing frameworks by allowing varying levels of adversarial knowledge (oblivious to omniscient) and control over a fraction $\epsilon$ of corrupted vertices. Within this setting, we propose new algorithms that achieve improved performance in both runtime and robustness.

Read our report

Methods #

Mean-Adjusted Median #

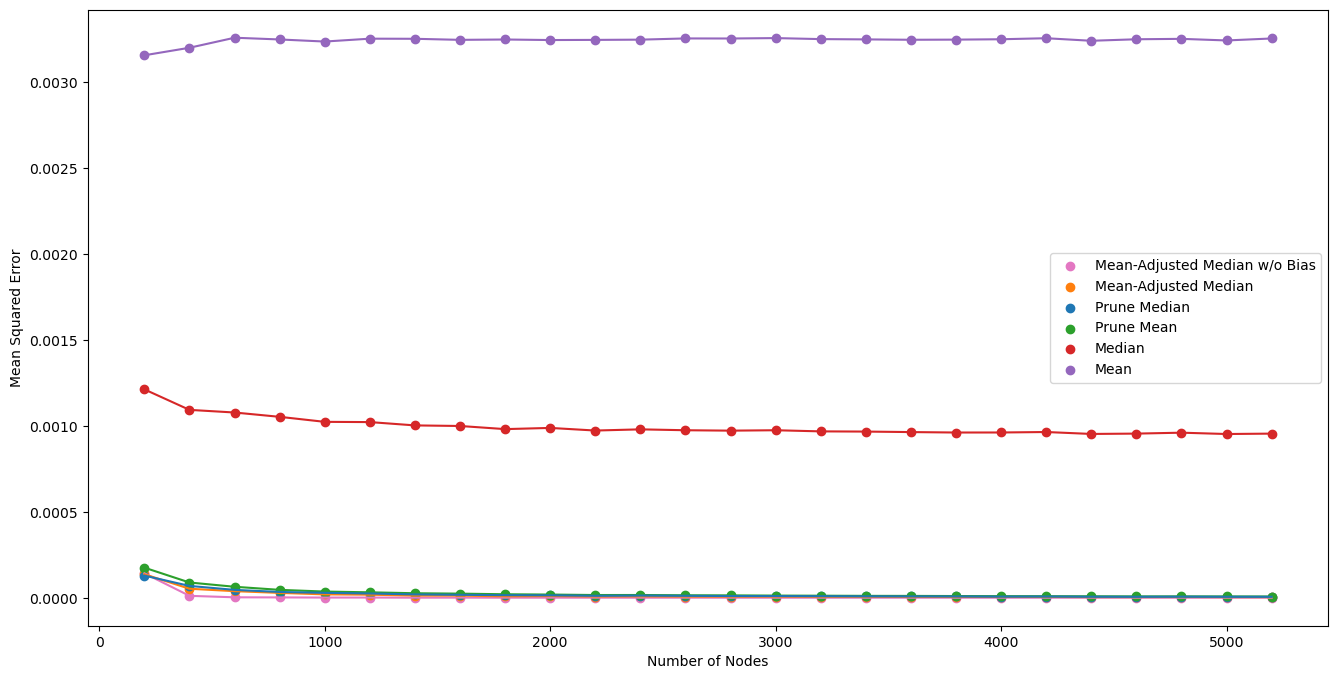

This estimator is based on the observation that the median of the degree distribution is more robust to outliers than the mean in the presence of an oblivious adversary. By comparing the median and mean of the degree distribution, and applying a corrective adjustment, we estimate $p$ in $O(n)$ time with provable error guarantees:

$$ |p - \hat{p}| \leq O(n^{-1/4}) \quad \text{with high probability}. $$

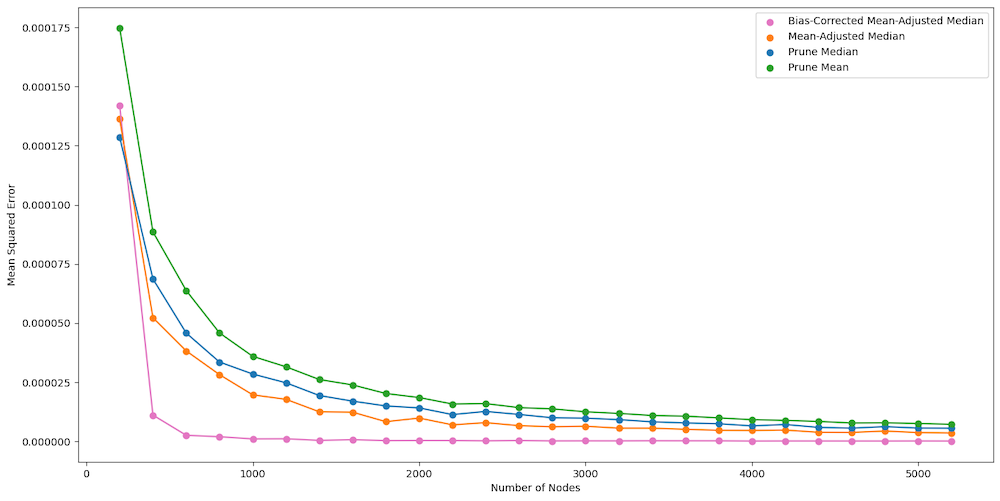

Bias-Corrected Mean-Adjusted Median #

We extend the previous method to account for a bias term that depends on $n$, $\epsilon$, $p$, and $q$. The corrected estimator removes this bias without requiring knowledge of $p$ or $q$, and retains the same linear runtime.

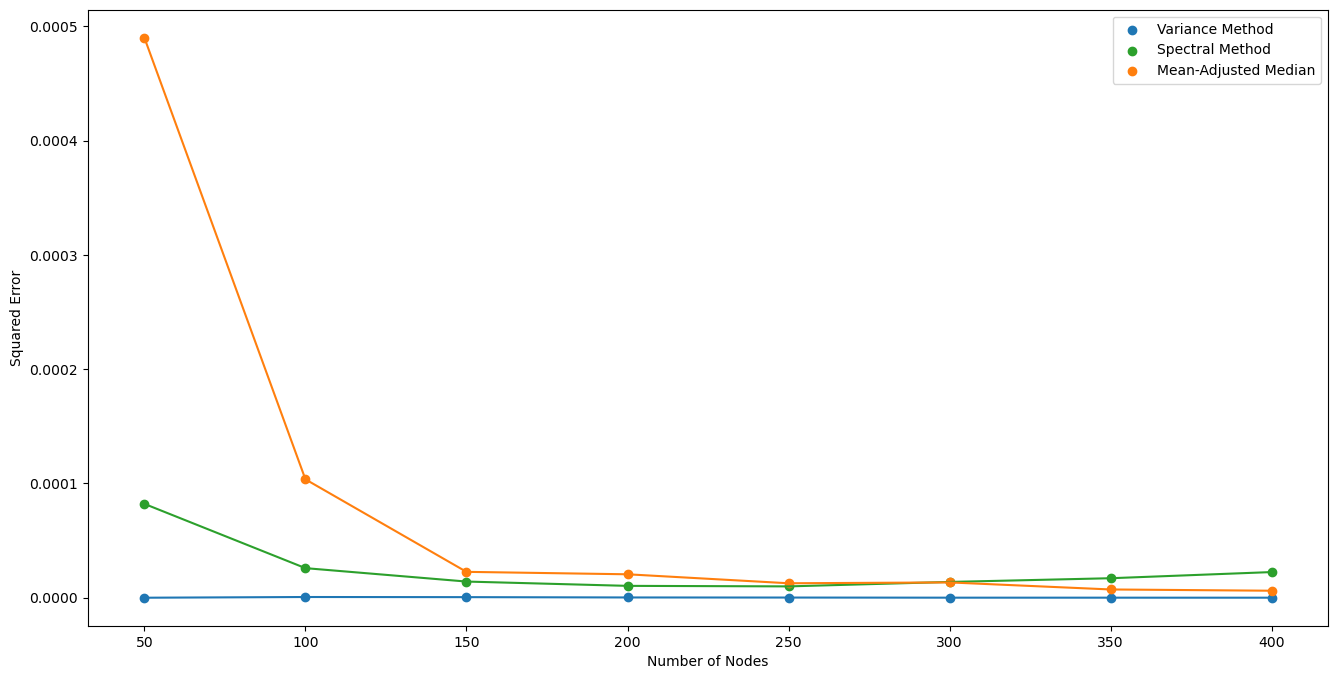

Variance-Based Filtering #

We propose an iterative filtering algorithm that removes corrupted vertices based on their contribution to the deviation in empirical degree variance. The algorithm identifies uncorrupted subgraphs where the degree distribution better matches the expected binomial variance. This method has runtime $O(\epsilon n^3)$, and performs well in practice. Theoretical guarantees remain a direction for future work.

Input: Laplacian matrix $L$, parameter $\epsilon$

Output: Filtered vertex set $V$

Let $n \gets$ number of vertices

Initialize $V \gets$ set of all vertices

For $t = 0$ to $\epsilon n - 1$:

- For each vertex $v \in V$:

- Compute the subgraph $G_{V \setminus {v}}$ by removing vertex $v$ from $V$

- Compute and store the value

$$ \left| s^2_{G_{V \setminus {v}}} - \hat{\sigma}^2_{G_{V \setminus {v}}} \right| $$

- Find

$$ x \gets \arg\max_{v \in V} \left| s^2_{G_{V \setminus {v}}} - \hat{\sigma}^2_{G_{V \setminus {v}}} \right| $$ - Update vertex set:

$$ V \gets V \setminus {x} $$

- For each vertex $v \in V$:

Return $V$

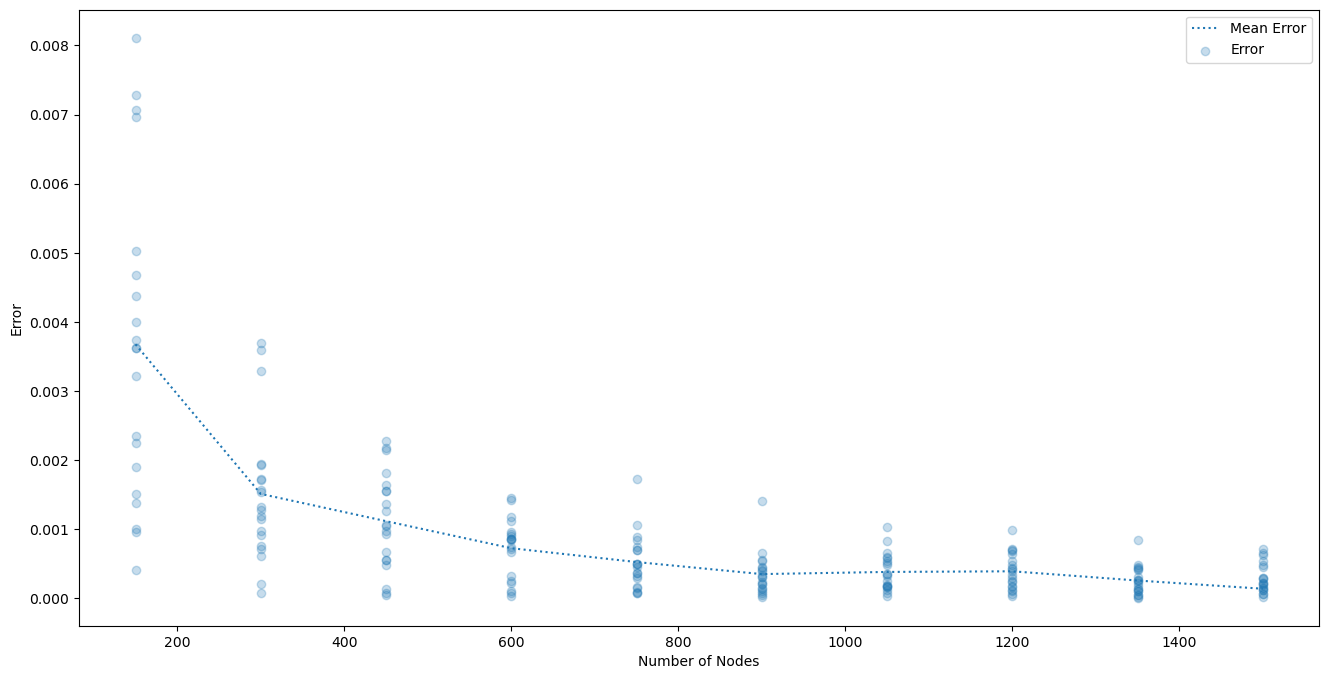

Empirical Results #

Comparison of Methods #

| Method | Runtime | Authors |

|---|---|---|

| Mean/Median | $O(n)$ | Acharya et al. |

| Prune then Mean/Median | $O(n \log(\epsilon n))$ | Acharya et al. |

| Spectral Method | $\tilde{O}(\epsilon n^3)$ | Acharya et al. |

| Mean-Adjusted Median | $O(n)$ | Lee et al. |

| Bias-Corrected M.A.M. | $O(n)$ | Lee et al. |

| Variance Method | $O(\epsilon n^3)$ | Lee et al. |

Repository #

The full codebase, including implementation and experiments, is available here:

The repository contains:

algos.py: Implementation of robust estimation methods.var_adversary.py: Code for simulating adversarial perturbations.figures.ipynb: Reproducible plotting scripts.report/: LaTeX sources for the paper.

This work contributes novel algorithms to the problem of robust estimation in random graphs, and provides both theoretical analysis and practical validation.